In the 2019–2023 period, Kiblix Festival turns to research and critique of contemporary technological media, as well as their soft applications in contemporary arts, culture and education.

LEVEL OF PRESENCE

Lesson ∞: virtual, augmented and mixed reality

With every technological medium, the conditio sine qua non is technology that functions flawlessly, because during conceptualization, and at the stage when technical demands meet content (in other words, when ideas and concepts are implemented in creative labs), things always get complicated for lack of effective technological support. Big corporations deliberately and systematically support the ever faster development of major technological breakthroughs, while the constant optimization of technology is the result of countless studies and focused work aimed at bringing to market hardware and software for creating new visions and products for diverse users.

According to a recent estimate by Goldman Sachs, AR and VR are expected to grow into a $95 billion global market value by 2025. The strongest demand for the technologies currently comes from industries in the creative economy – specifically, gaming, live events, video entertainment and retail – but will find wider applications in industries as diverse as healthcare, education, the military, real estate, cultural heritage, and the arts.

Art medium, yes or no? On the one hand, it is about mapping, about the imitation of an artwork, about gallery setups, archiving, documenting, historicizing. On the other hand, it is about the medium as art, which is not necessarily image-forming, but can explore the medium as such, together with its boundaries; i.e., a creative upgrade of the medium, a creative use of the medium. As long as one is not familiar with the medium in terms of the processes and procedures it requires, one cannot manipulate it, be creative with it, or think about it critically – except in terms of the everyday effects and social impacts, which we observe in the sense of dehumanization of technology, or hard digitalization.

Preserving the past and human collective memories is one of the objectives of creative industries. Virtual cultural heritage apps create history by inviting users to travel back in time. One of the more popular ways to increase the immersion of travelers through time in the virtual space is through interactive storytelling that allows users to learn while exploring.

While the process of developing interactive digital storytelling applications is still a complex one, technology options have been linked to content production through the involvement of professionals from different artistic and scientific disciplines. Scanning systems are capable of capturing hundreds of thousands of points per second, creating a highly accurate representation of artifacts and entire cultural and historical sites. Processed on servers, this data yields high-resolution point clouds with densities of up to several hundred points per square meter. Because the clouds lack topology and contain large amounts of data and noise, new approaches to data processing, storage and management will be required before this data can become truly useful.
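As a rough illustration of the density figure mentioned above, the following Python sketch estimates points per square meter by binning a point cloud's ground-plane footprint into a grid. The synthetic data, the 1 m cell size and the function name are assumptions made for illustration; they do not describe any particular scanning pipeline.

```python
import random
from collections import Counter

def density_per_square_meter(points, cell_size=1.0):
    """Mean number of points per square meter over occupied grid cells.

    points: iterable of (x, y, z) tuples in meters.
    cell_size: edge length of a square ground-plane cell in meters (assumed).
    """
    # Count how many points fall into each ground-plane cell (z is ignored).
    counts = Counter(
        (int(x // cell_size), int(y // cell_size)) for x, y, _z in points
    )
    if not counts:
        return 0.0
    cell_area = cell_size * cell_size
    return sum(counts.values()) / (len(counts) * cell_area)

# Synthetic example (assumption): 30,000 random points over a 10 m x 10 m site.
rng = random.Random(0)
cloud = [(rng.uniform(0, 10), rng.uniform(0, 10), rng.uniform(0, 3))
         for _ in range(30_000)]
print(round(density_per_square_meter(cloud)))
```

Averaging over occupied cells only keeps empty space around a site from diluting the estimate; a real pipeline would likely also filter noise before measuring density.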

The world of Virtual Reality (VR), Augmented Reality (AR) and Mixed Reality (MR) is expanding rapidly. It can be hard to know where to begin, because the three types of experience overlap at times, which obscures their similarities and differences. Each experience requires a slightly different development stack and tools, and in some cases necessitates targeting the specific display the observer is using.

Devices employing augmented and virtual reality define two spectrums of immersive technology that could replace mobile computing. A range of major products came to market in 2016 from companies including Oculus VR, Sony and Google. Since it bought Oculus for $2.1 billion, Facebook has acquired a further 11 AR and VR companies, underscoring the company’s view that AR and VR will form the next frontier. The large investments and acquisitions by tech giants suggest that these technologies will become increasingly integrated with the platforms on which we consume content.

What distinguishes VR from adjacent technologies is the level of immersion it promises. When VR users look around, their view of that world adjusts the same way it would if they were looking or moving in the real world. The key buzzword here is presence, shorthand for technology and content that can trick the brain into believing it is somewhere it’s not. When you flinch at a virtual dinosaur, or don’t want to step off an imaginary ledge, that’s presence at work. HTC Vive, Oculus Rift and Google Cardboard are examples of this type of immersion.

Augmented reality (AR) takes your view of the real world and adds digital information and/or data on top of it. This might be as simple as numbers or text notifications, or as complex as a simulated screen. But in general, AR lets you see both synthetic light and natural light bouncing off objects in the real world. Pokémon Go is a game that fits this category: its characters are located at certain points on maps, but a character, once found, is not tethered to that spot; it moves around as your phone moves.

Mixed reality would give the object a "tethered" characteristic. Google Glass is an example of this type of augmentation. MR is the merging of real and virtual worlds to produce new environments and visualizations where physical and digital objects co-exist and interact in real time. The primary headset for MR today is the Microsoft HoloLens. Mixed reality takes place neither purely in the physical world nor purely in the virtual world; it is a mix of reality and virtual reality. It anchors virtual objects to a point in real space, making it possible to treat them as "real," at least from the perspective of the person who can see the MR experience.

AR has already begun to enter the public consciousness. What began as a niche is finally accelerating in its journey towards popular use. From film studios and games developers to global brands and advertising agencies, developers are creating more and more sophisticated, immersive experiences to captivate and emotionally engage audiences. In its latest global forecast, CCS Insight predicts sales of dedicated VR headsets to grow to 22 million units by 2021 – an 800 percent increase versus 2017. The same report claims sales of smartphone headsets, such as Samsung’s Gear VR, will grow five-fold to 70 million units during the same period. Despite the success of 360-degree video content to date, the entertainment industry has encountered numerous problems in achieving true immersion within those experiences.

The essence of VR is to create a true sense of presence by making the viewer believe they’re truly inside the virtual world. But while every technological advancement brings us a step closer, there remain a number of hurdles to overcome. Most VR experiences are designed to be viewed on a headset strapped around your eyes like goggles. Stereoscopic 360° video adds depth in a similar way to stereo 3D movies but, while the experience is compelling, the depth is only on the horizon and the illusion breaks as soon as you start to shift your head – the world moves with your head, rather than your head moving within the world. This is one of the reasons VR in particular has a reputation for making people feel sick.

The development of volumetric video and positional VR aims to solve the limitations of 360° video. Companies like Lytro, with its light-field Immerge camera, are carving a new path in immersive content. The Immerge camera records the depth and distance of objects in an environment. Then, rather than stitching images together like a traditional 360-degree camera, it effectively recreates the scene in a 3D virtual space. By capturing information on all light passing into the camera sensor, it becomes possible to move around inside a scene, even looking under or behind objects, creating a true sense of presence. This freedom to both rotate and move the viewpoint has been termed ‘six degrees of freedom’ in VR circles.
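The difference between rotation-only viewing and six degrees of freedom can be sketched in a few lines of Python. This is a simplified, top-down illustration under stated assumptions: the `HeadPose` type and `bearing_to` function are hypothetical names, and only yaw is applied for brevity, although a full implementation would use all three rotations.

```python
import math
from dataclasses import dataclass

@dataclass
class HeadPose:
    # Translation in meters (the three positional degrees of freedom).
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0
    # Rotation in radians (the three rotational degrees of freedom).
    # Only yaw is used in this simplified sketch.
    yaw: float = 0.0
    pitch: float = 0.0
    roll: float = 0.0

def bearing_to(point, pose, positional_tracking):
    """Horizontal angle (degrees) from the viewer's forward axis to a point.

    With positional_tracking=False the head translation is ignored, which
    mimics rotation-only playback such as 360-degree video.
    """
    px, _py, pz = point
    ox, oz = (pose.x, pose.z) if positional_tracking else (0.0, 0.0)
    world_angle = math.atan2(px - ox, pz - oz)  # 0 means straight ahead (+z)
    return math.degrees(world_angle - pose.yaw)

statue = (0.0, 0.0, 2.0)   # object 2 m in front of the origin
leaned = HeadPose(x=0.5)   # viewer leans 0.5 m to the right

print(bearing_to(statue, leaned, positional_tracking=False))  # view unchanged
print(bearing_to(statue, leaned, positional_tracking=True))   # parallax shift
```

Without positional tracking the statue stays dead ahead however far the viewer leans, which is the "world moves with your head" effect; with it, the statue shifts to the left, as it would in physical space.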

The experience is also impacted by the current field-of-view offered by first- and second-generation headsets. Our binocular vision makes the human field-of-view around 200° horizontally, but most headsets give a measly 110° – just over half of what we see in reality. We are also still a long way off creating experiences of as high a resolution as we see with the human eye; it is immediately evident we are watching via a screen.
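The two gaps described above can be put into numbers with a short Python sketch. The field-of-view figures are the ones quoted in the text; the panel width of 1080 horizontal pixels per eye is an assumed, illustrative value, and spreading it evenly across the whole field of view is a simplification.

```python
HUMAN_FOV_DEG = 200.0     # horizontal field of view quoted in the text
HEADSET_FOV_DEG = 110.0   # typical headset figure quoted in the text
PANEL_WIDTH_PX = 1080     # assumed horizontal pixels per eye (hypothetical)

def fov_coverage(headset_deg, human_deg):
    """Share of the human horizontal field of view a headset fills."""
    return headset_deg / human_deg

def pixels_per_degree(width_px, fov_deg):
    """Average angular resolution if pixels were spread evenly over the FOV."""
    return width_px / fov_deg

print(f"coverage: {fov_coverage(HEADSET_FOV_DEG, HUMAN_FOV_DEG):.0%}")
print(f"pixels per degree: {pixels_per_degree(PANEL_WIDTH_PX, HEADSET_FOV_DEG):.1f}")
```

Widening the field of view without adding pixels lowers the pixels-per-degree figure, which is why field of view and resolution have to improve together.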

A hologram phone, such as the Hydrogen One, an Android phone released in the first quarter of 2018, has a "hydrogen holographic display" capable of showing 3D holographic content without special glasses. It uses "multi-view" or "4-view" display technology that replaces the 3D "2-view" approach. It will soon become modular, with accessories that will even allow the recording of holographic images.

The Tango system, which involves advanced hardware for rapidly mapping an indoor environment, is ideal for AR applications. It has been on the market for a few years now and is supported by two devices, although these account for an insignificant percentage of the Android market. Tango phones serve as a guidebook to the future of smartphones. Because of AR, smartphones will need special sensors and massive processing power. AR is on the rise, it is trending, and soon we will start choosing phones based on AR capabilities (much like we now choose them based on camera quality). This prediction applies to enterprise employees in BYOD (bring-your-own-device) environments, too. Just as developers of in-house apps and back-end systems can count on BYOD devices sporting camera electronics, they will soon count on them to do advanced AR.

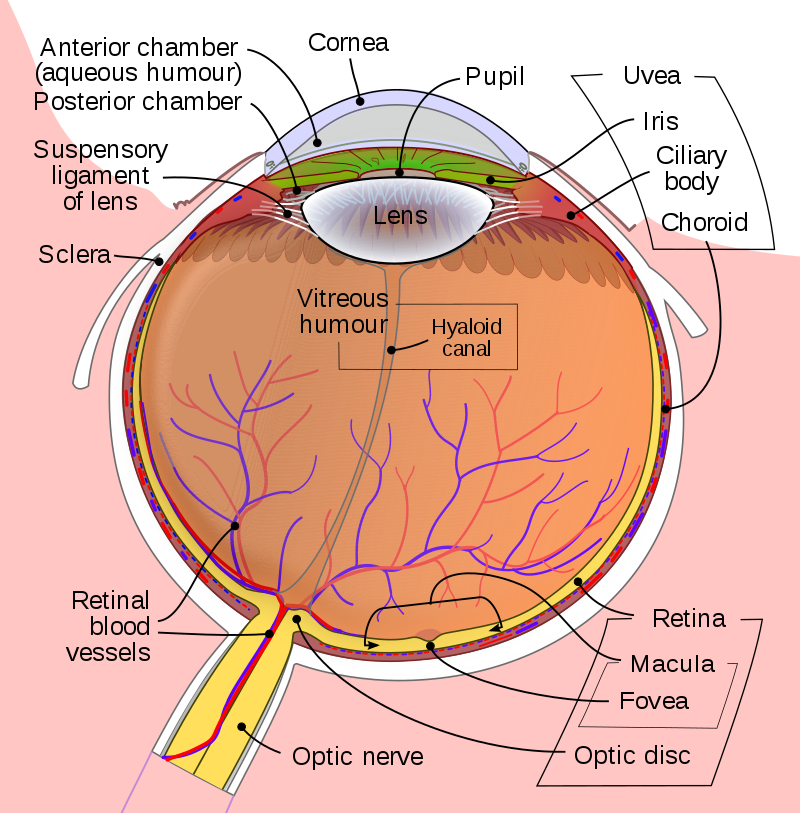

Latency, too, can undo the illusion. Even the tiniest delay in the display reacting to a movement or command dissolves any sense of reality. To achieve full immersion, headsets need to improve drastically on the current field of view (FOV), resolution and latency.

Foveated rendering is another emerging technology making waves in VR. It is a rendering technique that uses an eye tracker integrated with a virtual reality headset to reduce the rendering workload by greatly reducing the image quality in the peripheral vision (outside the zone gazed at by the fovea). The technique, which mimics the way humans focus on and process the world around them, uses gaze detection to tell the VR application where the user is looking and therefore which area of the view to construct in high definition.

Just as the human eye only focuses on a small window of the world around us at any one time, foveated rendering draws the rest of our FOV at lower resolutions. As well as saving an enormous amount of pixel data, the technology better replicates how we truly see the world, creating a deeper, more immersive experience.
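The idea can be sketched in Python as follows. This is a minimal illustration, not how any particular headset or engine implements it: the quality tiers, the 10° tiles and the flat angular grid are all assumptions, and the function names are hypothetical.

```python
import math

# Hypothetical quality tiers: (max eccentricity in degrees, resolution scale).
TIERS = [(10.0, 1.0), (25.0, 0.5), (float("inf"), 0.25)]

def resolution_scale(tile_center_deg, gaze_deg):
    """Pick a resolution scale from the angular distance to the gaze point."""
    dx = tile_center_deg[0] - gaze_deg[0]
    dy = tile_center_deg[1] - gaze_deg[1]
    eccentricity = math.hypot(dx, dy)
    for max_ecc, scale in TIERS:
        if eccentricity <= max_ecc:
            return scale
    return TIERS[-1][1]

def shaded_fraction(gaze_deg, fov_deg=110.0, tile_deg=10.0):
    """Fraction of full-resolution pixels actually shaded across the view."""
    tiles = int(fov_deg // tile_deg)
    half = fov_deg / 2.0
    total = 0.0
    for row in range(tiles):
        for col in range(tiles):
            center = (-half + (col + 0.5) * tile_deg,
                      -half + (row + 0.5) * tile_deg)
            scale = resolution_scale(center, gaze_deg)
            total += scale * scale  # pixel count scales with area
    return total / (tiles * tiles)

# Gaze at the center of the view; the eye tracker would update this per frame.
print(f"{shaded_fraction(gaze_deg=(0.0, 0.0)):.2f}")
```

With these assumed tiers, only a small share of the full-resolution pixel count is shaded when the user looks straight ahead, which shows where the workload reduction comes from; real systems tune the tiers against perceptual quality.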

We have a way to go before we reach true immersion in VR and AR content, with major developments in both software and hardware still to be made.